Deep fakes are nothing new. What is new, however, is the quality of the fakes. In the old days, letters could be forged, but it took great skill to do it well and people were very alert to the danger. Then cameras came along and, for a while, they were perceived as the ultimate in reliability, hence the saying “the camera never lies”.

However, humans do lie and modern technology is helping to take the ancient art and science of lying to a whole new level.

The issue of deep fakes

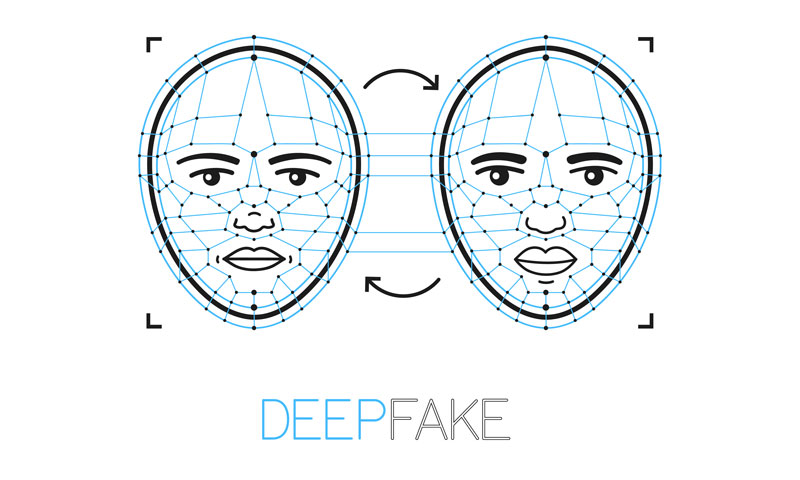

Deep fakes are what you get when high-quality images and/or audio meet sophisticated editing powered by artificial intelligence. These days, even budget-level smartphones can record HD videos and upload them straight to YouTube, or to any of the other main social-media platforms, all of which are eager to steal YouTube’s crown as the internet’s video hub. This means that there’s plenty of raw material for deep-fake creators to use.

Similarly, there’s no shortage of decent video-editing software, some of which is available for free. Admittedly, for true deep fakes, you’re going to want something much better than the basic programmes that let you tidy up videos before posting them to YouTube.

Throw in artificial intelligence, which is now everywhere, and you can add the capability to predict and model someone’s behaviour, for example their speech patterns and habitual body language. In other words, given enough genuine video footage of a person, you can create believable fake footage of them doing just about anything.

From porn to politics

As is often the case with technology, deep fakes appear to have started off in the world of porn. Celebrities, generally women, found their heads pasted onto other people’s bodies. Again, there is nothing new in this. What was new, however, was just how well it was done.

It was done so well, in fact, that major alarm bells started to ring. If this could be done in porn, then why not in politics or policing, especially now that facial-recognition software is becoming increasingly mainstream? Deep-fake creators could make your favourite politician, or actor, do or say practically anything, in a way that is increasingly difficult to spot as a fake.

How to counter deep fake videos?

Initial efforts to counter deep fakes largely focused on analysing existing videos. The problem with this approach is that, by definition, it can only be used after a video is released. Some companies, therefore, decided to turn the situation around and focus on developing ways for people to mark their own “genuine” videos, meaning that anything without that mark could be assumed to be a forgery.

While various approaches are being trialled, the general consensus appears to be that the best approach would be to combine blockchain with some kind of secondary verification such as a tag or graphic. In other words, to create the 21st-century digital equivalent of a fingerprint stuck into the wax used to seal a document closed. To verify police body-camera videos, for example, the company Axiom is using blockchain to fingerprint videos on the physical device where the recording was made (i.e. the body cam).
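
To make the fingerprinting idea a little more concrete, here is a minimal Python sketch. It is not a description of how any vendor's product actually works: the `fingerprint` function, the `Ledger` class and the `bodycam-001` device ID are all hypothetical names invented for illustration, and the in-memory hash chain is only a toy stand-in for a real blockchain. The sketch assumes the fingerprint is simply a SHA-256 hash of the raw video bytes, recorded at capture time and checked again later.

```python
import hashlib
import io
import json


def fingerprint(stream, chunk_size=65536):
    """Return the SHA-256 hex digest of a binary stream, read in chunks."""
    digest = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


class Ledger:
    """Toy append-only ledger (an assumption, not a real blockchain).

    Each entry commits to the hash of the previous entry, so changing
    an old entry breaks every link after it.
    """

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _entry_hash(prev_hash, device_id, video_hash):
        payload = json.dumps([prev_hash, device_id, video_hash]).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def register(self, device_id, video_hash):
        """Record a video fingerprint, linked to the previous entry."""
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS
        entry = {
            "prev_hash": prev_hash,
            "device_id": device_id,
            "video_hash": video_hash,
            "entry_hash": self._entry_hash(prev_hash, device_id, video_hash),
        }
        self.entries.append(entry)
        return entry

    def chain_is_intact(self):
        """Recompute every link and confirm nothing has been altered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            expected = self._entry_hash(
                prev_hash, entry["device_id"], entry["video_hash"]
            )
            if entry["prev_hash"] != prev_hash or entry["entry_hash"] != expected:
                return False
            prev_hash = entry["entry_hash"]
        return True

    def is_registered(self, video_hash):
        """True if this exact fingerprint was recorded at capture time."""
        return any(entry["video_hash"] == video_hash for entry in self.entries)


if __name__ == "__main__":
    # Stand-in bytes; a real device would hash the recorded video file.
    original = b"raw body-cam footage bytes"
    edited = b"raw body-cam footage bytes, edited"

    ledger = Ledger()
    ledger.register("bodycam-001", fingerprint(io.BytesIO(original)))

    print(ledger.is_registered(fingerprint(io.BytesIO(original))))  # True
    print(ledger.is_registered(fingerprint(io.BytesIO(edited))))    # False
```

The point of the design is that changing even a single byte of the footage produces a completely different fingerprint, so an edited copy no longer matches anything on the ledger. Note that a plain hash like this would also flag harmless changes such as re-encoding, which is one reason real systems are likely to be considerably more involved.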

The next generation of authentication technology, such as “DeepDetector” from DuckDuckGoose, uses a deep-learning network designed and trained to recognise AI-generated or AI-manipulated content, making it an essential asset in the fight against deep fakes.